Abstracts

Definitions of Causality

David R. Cox, Nuffield College, Oxford

Some of the definitions of causality are reviewed and their implications for the interpretation of statistical analyses outlined.

Causal Models for Dynamic Treatments with Survival Outcomes

Vanessa Didelez, University of Bristol

A dynamic treatment is a set of rules that specifies, for each time point at which a treatment decision must be made, what that decision should be given the patient's history so far. An example in the context of HIV studies is "start treatment when CD4 count first drops below 600". An optimal dynamic treatment is a set of rules that optimises a criterion reflecting better health for the patient. I will address issues of time-dependent confounding, identification, g-computation and marginal structural models, with particular attention to the case of survival outcomes. Dynamic marginal structural models are becoming popular for HIV studies such as the one mentioned above. The particular difficulty with survival outcomes is that marginal and conditional models are typically not compatible. I will discuss and compare approaches, as well as the issue of simulating from structural models; this leads to additional insight into and understanding of the models used in this context.
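As an illustration only, the quoted rule can be written as a function of the patient's history. The following is a minimal sketch and not the speaker's code: the function names are hypothetical, the history is reduced to a list of CD4 counts at successive visits, and it assumes that treatment, once started, is continued at all later visits.

```python
from typing import List, Optional

CD4_THRESHOLD = 600  # threshold quoted in the abstract's example


def first_treatment_time(cd4_history: List[float],
                         threshold: float = CD4_THRESHOLD) -> Optional[int]:
    """Index of the first visit at which CD4 is below the threshold, or None."""
    for t, cd4 in enumerate(cd4_history):
        if cd4 < threshold:
            return t
    return None


def treatment_decisions(cd4_history: List[float],
                        threshold: float = CD4_THRESHOLD) -> List[bool]:
    """Decision at each visit under the rule 'start when CD4 first drops below threshold'.

    Assumption (not stated in the abstract): treatment continues once started.
    """
    start = first_treatment_time(cd4_history, threshold)
    return [start is not None and t >= start for t in range(len(cd4_history))]


print(treatment_decisions([750, 640, 590, 620]))  # [False, False, True, True]
```

The decision at each visit depends only on the history observed up to that visit, which is what makes the rule dynamic rather than a fixed treatment schedule.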

1807: Economic shocks, conflict and the slave trade

James Fenske, Economics, Oxford

Suppression of the slave trade after 1807 increased the incidence of conflict between Africans. We use geo-coded data on African conflicts to uncover a discontinuous increase in conflict after 1807 in areas affected by the slave trade. In West Africa, the slave trade declined. This empowered interests that rivaled existing authorities, and political leaders resorted to violence in order to maintain their influence. In West-Central and South-East Africa, slave exports increased after 1807 and were produced through violence. We validate our explanation using Southwestern Nigeria and Eastern South Africa as examples.

Model Selection in the Presence of Many Correlated Instruments

Benjamin Frot, Department of Statistics, Oxford

(Joint work with Luke Jostins and Gil McVean)

Mendelian Randomisation is an instrumental variable method that uses genetic variants as instruments. In most cases, a very large number of putative instruments is available but little is known about their validity. Pleiotropy designates such a common situation in which a single variant has an effect on multiple outcomes through different mechanisms: this is a violation of the conditional independence assumption required in instrumental variable analyses. Another issue raised by genetic data sets comes from the large number of observed variables (e.g. ~23K in the case of gene networks) along with big sample sizes. I will first reason about a simple example involving a Gaussian graphical model with two instruments and two observed variables in order to show how Bayesian methods can be leveraged to deal with likelihood nonidentifiability and pleiotropy. I will then extend this analysis to larger systems and introduce an MCMC algorithm that allows a principled random walk on the space of possible directed acyclic graphs. In this context, a sparsity principle can be used to deal with pleiotropy.
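To make the pleiotropy problem concrete, here is a minimal simulation sketch. It is not the Bayesian or MCMC method of the talk; it uses the textbook single-instrument Wald ratio, cov(G, Y) / cov(G, X), and all names, effect sizes and the data-generating model are assumptions chosen for illustration. With a valid instrument the ratio recovers the causal effect of X on Y despite the unobserved confounder U; a direct effect of the variant on Y (pleiotropy) biases it.

```python
import random


def simulate(n, beta, pleiotropy, seed):
    """Draw (G, X, Y): G is an allele count, U an unobserved confounder.

    All coefficients are illustrative assumptions, not values from the talk.
    """
    rng = random.Random(seed)
    g, x, y = [], [], []
    for _ in range(n):
        gi = (rng.random() < 0.3) + (rng.random() < 0.3)  # allele count 0, 1 or 2
        u = rng.gauss(0, 1)                               # confounds X and Y
        xi = 0.8 * gi + u + rng.gauss(0, 1)
        yi = beta * xi + u + pleiotropy * gi + rng.gauss(0, 1)
        g.append(gi)
        x.append(xi)
        y.append(yi)
    return g, x, y


def covariance(a, b):
    """Sample covariance of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b)) / (len(a) - 1)


def wald_ratio(g, x, y):
    """Single-instrument IV estimate of the effect of X on Y."""
    return covariance(g, y) / covariance(g, x)


valid = wald_ratio(*simulate(n=50_000, beta=0.5, pleiotropy=0.0, seed=1))
biased = wald_ratio(*simulate(n=50_000, beta=0.5, pleiotropy=0.4, seed=1))
print(f"valid instrument: {valid:.2f}, pleiotropic instrument: {biased:.2f}")
```

Under this model the estimate converges to beta + pleiotropy / 0.8, so the pleiotropic instrument targets 1.0 rather than the true effect of 0.5.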

Causation in Sociology as a Population Science

John Goldthorpe, Social Policy and Intervention, Oxford

I consider three understandings of causation found in sociology: (1) as robust dependence; (2) as consequential manipulation; and (3) as generative process. I discuss problems that arise with (1) and (2) in sociology, and argue in favour of (3) as being that most appropriate for sociology considered as a population science. I give an illustration of (3) in the case of the explanation of 'secondary effects' in social inequalities in educational attainment.

Theory-independent limits on correlations from generalised Bayesian networks

Raymond Lal, Computer Science, Oxford

(Joint work with Joe Henson and Matt Pusey)

Quantum correlations which violate Bell inequalities cannot be recovered using classical random variables that are assigned to a certain background causal structure. Moreover, Tsirelson's bound demonstrates a separation between quantum correlations and those achievable by an arbitrary no-signalling theory. However, one can consider causal structures other than that of the usual Bell setup. We investigate quantum correlations from this more general perspective, particularly in relation to Pearl's influential work on causality and probabilistic reasoning, which uses the formalism of Bayesian networks. We extend this formalism to the setting of generalised probabilistic theories, and show that the classical d-separation theorem extends to our setting. We also explore how classical, quantum and general probabilistic theories separate for other causal structures; and also when all three sets coincide.
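For the usual Bell setup, the three-way separation can be checked numerically with the CHSH expression S = E(0,0) + E(0,1) + E(1,0) - E(1,1). The sketch below is illustrative and covers only this standard scenario, not the generalised Bayesian networks of the talk. The function names are hypothetical, and the quantum correlator E = cos(a - b) assumes a maximally entangled two-qubit state measured along angles a and b in a common plane.

```python
import math
from itertools import product


def chsh(E):
    """CHSH combination for a correlator E(x, y) with settings x, y in {0, 1}."""
    return E(0, 0) + E(0, 1) + E(1, 0) - E(1, 1)


def classical_max():
    """Maximum over deterministic local strategies (outcomes +1 or -1 per setting)."""
    best = -math.inf
    for a0, a1, b0, b1 in product([-1, 1], repeat=4):
        a, b = (a0, a1), (b0, b1)
        best = max(best, chsh(lambda x, y: a[x] * b[y]))
    return best


def quantum_value():
    """CHSH value at the standard optimal angles, assuming E = cos(a - b)."""
    alice = (0.0, math.pi / 2)
    bob = (math.pi / 4, -math.pi / 4)
    return chsh(lambda x, y: math.cos(alice[x] - bob[y]))


def pr_box_value():
    """No-signalling Popescu-Rohrlich box: outcomes anti-correlated only when x = y = 1."""
    return chsh(lambda x, y: -1 if (x == 1 and y == 1) else 1)


print(classical_max(), quantum_value(), pr_box_value())
```

The three values are 2 (the Bell bound), 2√2 (Tsirelson's bound) and 4 (the algebraic maximum, reached by a no-signalling theory).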

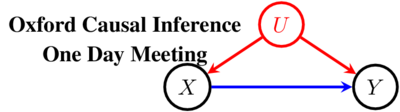

Causal Inference through a Witness Protection Program

Ricardo Silva, Statistical Science, UCL

One of the most fundamental problems in causal inference is the estimation of a causal effect when variables are confounded. In an observational study, when no randomized trial is performed, this is a difficult task as one has no direct evidence that all confounders have been adjusted for. We introduce a novel approach for estimating causal effects that exploits observational conditional independencies to suggest "weak" paths in an unknown causal graph. The widely used faithfulness condition of Spirtes et al. is relaxed to allow for a varying degree of "path cancellations" that will imply conditional independencies but do not rule out the existence of confounding causal paths. The outcome is a posterior distribution over bounds on the average causal effect via a linear programming approach and Bayesian inference. We claim this approach should be used in practice alongside other default tools in observational studies.

(Joint work with Robin Evans)